Google Cloud SQL - Import 100k+ Records to MySQL

Learn how to import 100k+ records into your MySQL Instance on Google Cloud

I realized the scope in which the number of records to be inserted into my database, two million. The use case exploded reaching the upper bounds of campaigns that could be inserted into the Google Ads API per an ad account. The number of records I'd need to create easily broke the 1MB limit per document offered by Firestore. If each mutation stored as a document, the number of reads would break read thresholds and start charging based on the number of reads and writes.

While I generally enjoy the use of Firestore for the real time and document change events in web and mobile applications, I required an import to push hundreds of thousands of records more quickly than the timeout of an http request. Thus this lead me down the path to MySQL on Google Cloud SQL to handle the change asynchronously.

Goals

- Create a Storage Bucket with SQL permissions to Bucket

- Create the CSV File - NodeJS

- Push to Storage Bucket

- Request for Importing CSV

- Poll for Changes when the Operation Completes

Assumptions:

Google Cloud SQL MySQL instance has previously been set up, NodeJs, knowledge of TypeScript.

Prerequisites:

https://www.npmjs.com/package/googleapis

Create Storage Bucket with Permissions

In order for the Google Cloud to import a CSV file, the Cloud SQL Admin API requires the usage of a storage bucket for the import.

First, collect the service account that's used for MySQL Instance.

Next go to the Cloud Storage and click the Create Bucket button and create the bucket. After creation, the service account needs permissions to access the bucket. Click the bucket name created and select the Permissions tab. Click the Add button with user icon next to it. Add the service account tied the MySQL instance. Next add the role for Storage Object Viewer.

Create CSV File and Push to Storage

MySQL allows for a couple of file types to import en masse, CSV and SQL. I chose CSV as csv-writer eased the creation of a CSV from POJOs.

Install the csv-writer and @google-cloud/storage package by running npm install csv-writer @google-cloud/storage --save.

I plucked the following code from one of my projects, but it's generic enough to be used in other applications. It writes to the storage stream via a delegate provided by the consumer.

Writing the records to the stream with csv-writer can be accomplished by using the delegate.

Once the csv file uploads to storage, the saveCsvImport file returns a promise with reference to the bucket path.

If you're denied to creating the csv, ensure the GOOGLE_APPLICATION_CREDENTIALS environment variable points to a json file for a IAM user with a role that can write to cloud storage. From the graphic below, click the key to the left of the service account name. This will download a json key file Google Cloud Libraries use for authentication. KEEP THIS FILE PRIVATE! DON'T ADD TO SOURCE CONTROL!

Request Cloud SQL to Import CSV

No specific role exists for the permissions needed to import and read the CloudSQL instances. In my experience, I granted the role Cloud SQL Admin. For a service account that runs on App Engine, the principle of least privilege broke. Viewing analysis provided by Google Cloud, I consumed two permissions: cloudsql.instances.import and cloudsql.instances.get. No role presently exists exclusive to those permissions, so I created a new role Cloud SQL Import.

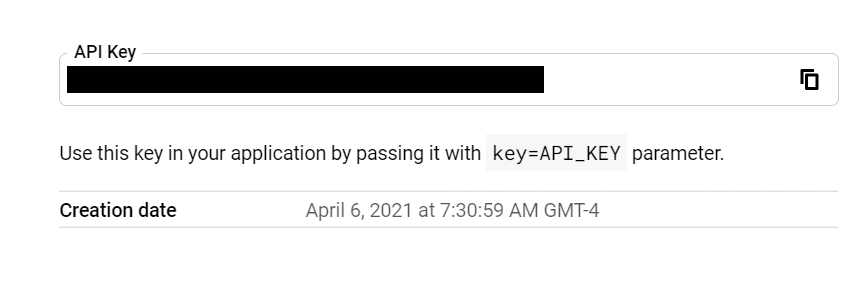

Another configuration requires attention before the ability to request changes for the SQL Admin API. In the API & Services credentials, click Create Credentials and choose API Key. The scope of the API key needs no further access than the Google SQL Admin API.

The key can be found in the upper right hand side of the screen.

The client needs to be authorized to execute import scoped to Google Cloud platform.

Once reference to the client has been established, the sqlAdmin client allows the ability to import the CSV file. For this demonstration, the import inserts records to a specific table in the defined database. The column name order needs to mirror the order in the CSV file.

Since the scope of this is to import, the interface for ISqlOperation excludes the backup and export context. Next, send the request to import to CloudSQL.

After making the request for import, a response of type ISqlOperation. Polling the status of the operation every five seconds to check the status can be accomplished like below.

Note, retrieving the status of the operation presently does not include failures of the operation for sqladmin_v1beta4.

As a note, Google Cloud SQL allows one operation per SQL Instance to run.

Summary

Google Cloud SQL's admin API allows the ability to import hundreds of thousands of records via a CSV or SQL file. The import operation performed depends on the use of Cloud Storage for import. Permissions specific to the folder for the Cloud SQL user requires configuration on the bucket to view the storage objects. Polling the status of the operation can be done utilizing RxJs until its complete.